The AI that Learned how to Cheat and Hide Data from it's Creators

- font size decrease font size increase font size

The AI that learned how to cheat and hide data from its creators

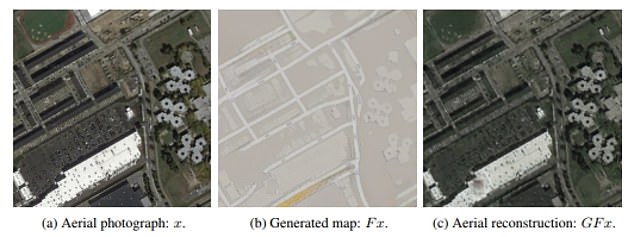

- AI was trained to transform aerial images into street maps and then back again

- They found that details omitted in final image reappeared when it was reverted

- It used steganography to 'hide' data in the image and recover the original photo

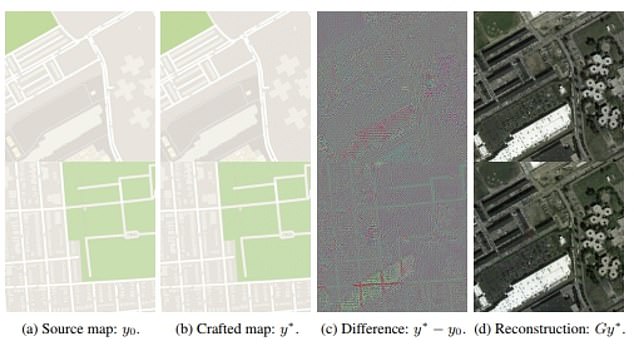

New research from Stanford and Google has shown that it's possible artificial intelligence software may be getting too clever. The neural network, called CycleGAN, was trained to transform aerial images into street maps, then back into aerial images. Researchers were surprised when they discovered that details omitted in the final product reappeared when they told the AI to revert back to the original image.

Stanford and Google researchers were surprised when they discovered that details omitted in the final product reappeared when they told the AI to revert back to the original image. For example, skylights on a roof that were absent from the final product suddenly reappeared when they returned to the original image, according to TechCrunch.

'CycleGAN learns to "hide" information about a source image into the images it generates in a nearly imperceptible, high-frequency signal,' the study states. 'This trick ensures that the generator can recover the original sample and thus satisfy the cyclic consistency requirement, while the generated image remains realistic.'

What ended up happening is that the AI figured out how to replicate details in a map by picking up on the subtle changes in color that the human eye can't detect, but that the computer can pick up on, TechCrunch noted. In effect, it didn't learn how to create a copy of the map from scratch, it just replicated the features of the original into the noise patterns of the other.

For example, skylights on a roof that were absent from the aerial reconstruction suddenly reappeared when they returned to the original image, or the aerial photograph labeled (a)

Researchers found the AI figured out how to replicate details in a map by picking up on the subtle changes in color that the human eye can't detect, but that the computer can pick up on. The researchers say the AI ended up being a 'master of steganography,' or the practice of encoding data in images. CycleGAN was able to pick up information from the original source map and then encode it in the reconstructed image. By doing that, it enables the AI to be able to recover the original image with precise accuracy. However, it means that the AI was using steganography to avoid actually learning how to perform the requested task in order to speed up the process, TechCrunch noted.